Concurrency and Parallelism in the Real World

Summary: Concurrency and parallelism are concepts we use every day away from the computer. I give some real-world examples, and we analyze them for concurrency and parallelism.

What is the difference between concurrency and parallelism? There are a lot of explanations out there, but most of them are more confusing than helpful. The thing is, you see concurrency and parallelism all the time in the world outside the computer. Once you learn what they are, you'll see them everywhere!

Ah, the Olympic games. So exciting! So much running!

Running

So much jumping!

Jumping

So much ... sitting?

Sitting

Hundreds of people compete in the 100-meter dash. But do they all run at the same time? No. How many can run at once is limited by the number of lanes on the track. And what do the people who aren't running do? They wait.

What is parallelism? How many people can run at the same time, which is the number of lanes. What is concurrency? How many runners are competing in the event.

But how can more people compete than there are lanes? The answer is that lots of people handle the bookkeeping: tables of "heats" that decide who runs when. If you follow the table, everybody runs at some point and a winner can be chosen.
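To make the heat bookkeeping concrete, here is a minimal Python sketch. It assumes a made-up field of 20 runners and an 8-lane track, and `make_heats` is a hypothetical helper, not how real meets seed their heats. The lane count caps how many run at once (parallelism), while the list of heats is what lets all 20 compete (concurrency).

```python
def make_heats(runners, lanes):
    """Split runners into heats of at most `lanes` runners each (hypothetical helper)."""
    if lanes < 1:
        raise ValueError("need at least one lane")
    return [runners[i:i + lanes] for i in range(0, len(runners), lanes)]


runners = [f"runner-{n}" for n in range(1, 21)]  # 20 made-up competitors
heats = make_heats(runners, lanes=8)             # an assumed 8-lane track

for number, heat in enumerate(heats, start=1):
    print(f"Heat {number}: {len(heat)} runners")
# Heat 1: 8 runners
# Heat 2: 8 runners
# Heat 3: 4 runners
```

Only eight people are ever on the track, but every runner appears in exactly one heat.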

On a computer, the parallelism is equal to the number of CPUs or cores. Four cores means you can do four things at the same time. But there are often many more tasks than cores, so you need some kind of concurrency system to keep track of all of the work.
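A thread pool is one common way to express this on a computer. The Python sketch below sizes a pool to `os.cpu_count()` and hands it 1,000 tiny tasks; `task` is a hypothetical stand-in for real work. `ThreadPoolExecutor` keeps pending work in an internal queue, and idle workers pull the next item. One caveat: in CPython, threads don't execute Python bytecode in parallel because of the global interpreter lock, so for CPU-bound pure-Python work `ProcessPoolExecutor` is the usual choice. Its API has the same shape.

```python
import os
from concurrent.futures import ThreadPoolExecutor

# Parallelism: how many workers can run at once.
# os.cpu_count() may return None, so fall back to 1.
workers = os.cpu_count() or 1


def task(n):
    """A stand-in unit of work (hypothetical): square a number."""
    return n * n


tasks = range(1000)  # far more tasks than cores

# The executor queues pending tasks internally; whenever a worker is idle,
# it takes the next one. That queue is the concurrency mechanism.
with ThreadPoolExecutor(max_workers=workers) as pool:
    results = list(pool.map(task, tasks))

print(f"{workers} workers finished {len(results)} tasks")
```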

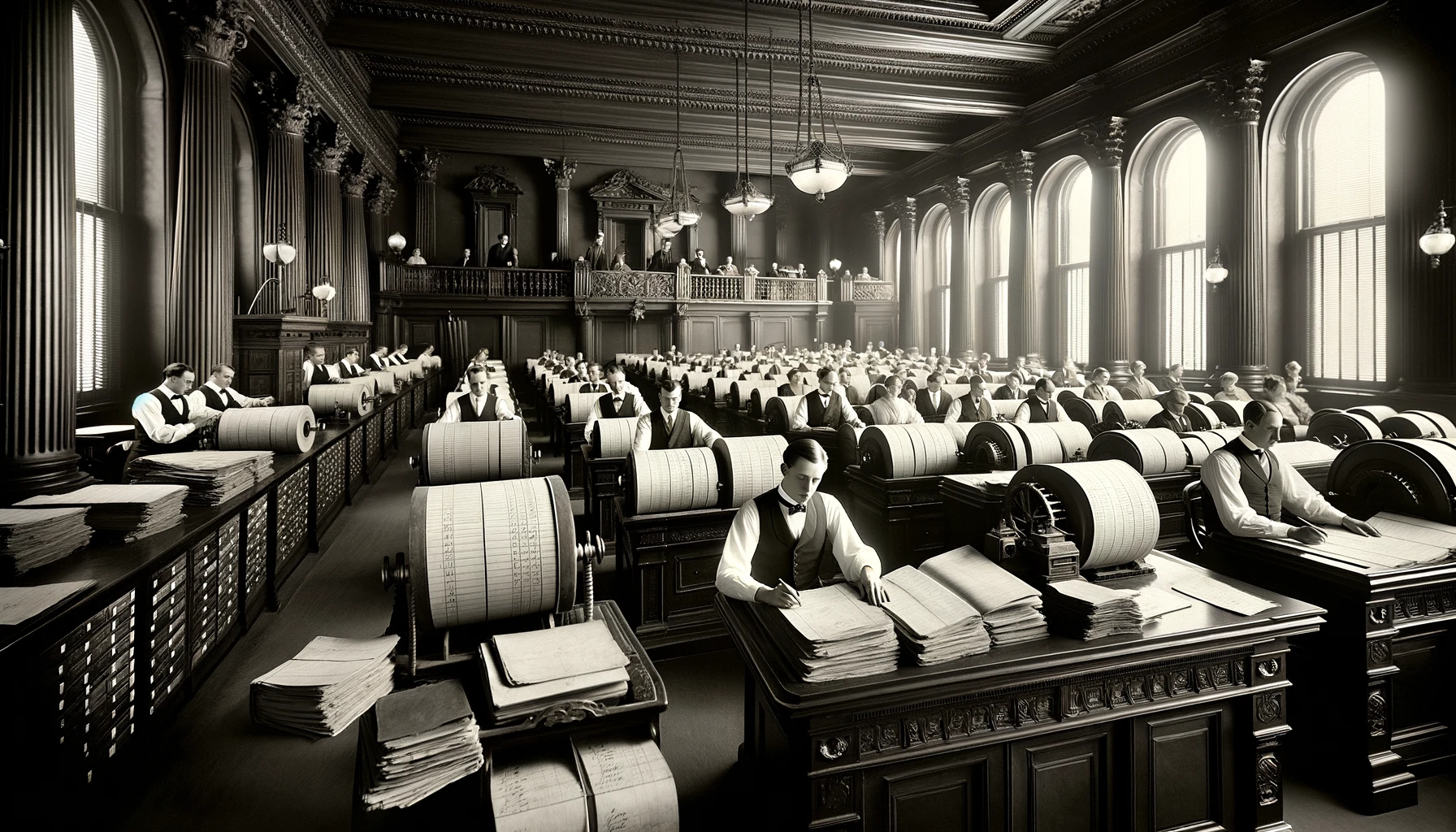

Let's look at another example. Imagine a bank from 1900, where there are no computers. All of the work is done by people. How much parallelism is there? How much work can be done at the same time? The answer is simple: it's the number of bankers.

Bankers

But there are many more transactions than bankers. People are coming to the bank to deposit, to transfer, to withdraw. There are peak times when it seems like the line is endless. And there are other times when the bank is quiet. What makes it possible for six bankers to handle hundreds of transactions?

In the bank's case, the answer is usually queues. A queue is a concurrency mechanism that stores work to be done later. A banker pulls work off the queue, processes it, and usually puts it onto another queue. At any given time, most of the work is waiting.
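Here is a small Python sketch of the bank, assuming six banker threads and 300 made-up transactions. Each banker pulls from an `incoming` queue and pushes finished work onto a `completed` queue, which stands in for "another queue". The `None` sentinels are an assumed shutdown convention: one per banker, placed after all the real work, so every banker stops once the line is empty.

```python
import queue
import threading

BANKERS = 6      # the parallelism
SENTINEL = None  # assumed signal: "the line is empty, go home"

incoming = queue.Queue()   # customers waiting in line
completed = queue.Queue()  # finished transactions, ready for the ledger


def banker(name):
    while True:
        txn = incoming.get()
        if txn is SENTINEL:
            break
        kind, amount = txn
        completed.put((name, kind, amount))  # hand the work to the next queue


# Hypothetical transactions: far more than there are bankers.
for i in range(300):
    incoming.put((("deposit", "withdraw", "transfer")[i % 3], i))

# One sentinel per banker; FIFO order means all real work is taken first.
for _ in range(BANKERS):
    incoming.put(SENTINEL)

staff = [threading.Thread(target=banker, args=(f"banker-{b}",)) for b in range(BANKERS)]
for t in staff:
    t.start()
for t in staff:
    t.join()

print(completed.qsize(), "transactions processed by", BANKERS, "bankers")
```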

Queue

Queues are very powerful because they decouple the producer from the consumer, so each can work at its own pace.
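A sketch of that decoupling in Python, under assumed numbers: a producer that emits 50 items with no delay and a consumer that spends about a millisecond on each. The queue absorbs the difference in pace. Giving it `maxsize=10` (an arbitrary choice) means the producer blocks once it gets ten items ahead, which keeps a fast producer from piling up unbounded work.

```python
import queue
import threading
import time

buffer = queue.Queue(maxsize=10)  # bounded: producer blocks if 10 items are waiting
DONE = object()                   # assumed end-of-stream marker
consumed = []


def producer():
    for item in range(50):
        buffer.put(item)  # fast: no delay between items
    buffer.put(DONE)


def consumer():
    while True:
        item = buffer.get()
        if item is DONE:
            break
        time.sleep(0.001)  # slow: simulated work per item
        consumed.append(item)


threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(len(consumed), "items consumed in order:", consumed == list(range(50)))
```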

Queues are everywhere in the real world. People line up for bathrooms. In this picture, what's the parallelism? How many people can go at the same time?

Potties
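The stalls are the parallelism, and the line is the concurrency. In code, a counting semaphore plays the role of the stalls: it lets at most N threads into a section at once and makes the rest wait. This Python sketch assumes three stalls and twelve people, and it records the highest number of people inside at once so we can check that the limit holds.

```python
import threading
import time

STALLS = 3                          # the parallelism (assumed count)
stalls = threading.Semaphore(STALLS)
lock = threading.Lock()             # protects the counters below
occupied = 0
max_occupied = 0


def visit():
    global occupied, max_occupied
    with stalls:                    # wait in line until a stall is free
        with lock:
            occupied += 1
            max_occupied = max(max_occupied, occupied)
        time.sleep(0.01)            # use the stall
        with lock:
            occupied -= 1


people = [threading.Thread(target=visit) for _ in range(12)]
for p in people:
    p.start()
for p in people:
    p.join()

print("people served:", len(people), "most at once:", max_occupied)
```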

A food booth at a festival is a great display of parallelism and concurrency. There are many different jobs to be done, and usually one person handles one job. They communicate and pass work around. People wait in one queue to order, then in another queue to get their food. Sometimes every cook does every job, constantly switching between tasks. That can work, too.

Food booth
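The one-person-per-job arrangement maps naturally onto a pipeline: one thread per role, with a queue between each pair of roles. The Python sketch below assumes three roles (cashier, cook, server) and three made-up customers; the `STOP` object is a hypothetical shutdown signal that each stage forwards to the next. The other arrangement, where every cook does every job, would instead be several identical workers pulling from a single queue.

```python
import queue
import threading

STOP = object()           # assumed shutdown signal passed down the line
orders = queue.Queue()    # customers in line to order
to_cook = queue.Queue()   # tickets passed from cashier to cook
to_serve = queue.Queue()  # plates waiting at the pickup window
served = []


def cashier():
    while (customer := orders.get()) is not STOP:
        to_cook.put(f"ticket for {customer}")
    to_cook.put(STOP)  # tell the next stage we're done


def cook():
    while (ticket := to_cook.get()) is not STOP:
        to_serve.put(ticket.replace("ticket", "plate"))
    to_serve.put(STOP)


def server():
    while (plate := to_serve.get()) is not STOP:
        served.append(plate)


staff = [threading.Thread(target=f) for f in (cashier, cook, server)]
for t in staff:
    t.start()
for name in ["Ada", "Ben", "Cy"]:
    orders.put(name)
orders.put(STOP)
for t in staff:
    t.join()

print(served)
# ['plate for Ada', 'plate for Ben', 'plate for Cy']
```

Each role runs in its own thread, so the cook can be working on one order while the cashier takes the next.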

Languages like Clojure give you the tools you need to build little kitchens like that: different threads working, communicating, and passing work around.

Some of the tools Clojure gives you are threads, atoms, refs, agents, core.async, persistent data structures (including persistent queues), and futures. It can be fun to figure out ways to arrange them to get the job done.

Here's one reason parallelism and concurrency are confusing: modern operating systems and languages simulate parallelism with concurrency. You can have many more programs running than you have CPUs. Internally, the OS uses a concurrency system (its scheduler) to switch quickly between different programs.
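To see how concurrency alone can look like parallelism, here is a toy round-robin scheduler in Python that runs several "programs" on one simulated CPU. It is a simplification: each program is a generator that voluntarily yields after every step (cooperative multitasking), whereas a real OS scheduler preempts threads using timer interrupts. The names `program` and `run_round_robin` are hypothetical.

```python
from collections import deque


def program(name, steps):
    """A hypothetical 'program': each yield is a point where it can be paused."""
    for i in range(steps):
        yield f"{name} step {i}"


def run_round_robin(programs):
    """Run many programs on one simulated CPU, switching after every step."""
    ready = deque(programs)  # the run queue
    trace = []
    while ready:
        current = ready.popleft()
        try:
            trace.append(next(current))  # give it one time slice
            ready.append(current)        # then send it to the back of the line
        except StopIteration:
            pass                         # program finished; drop it
    return trace


trace = run_round_robin([program("A", 2), program("B", 3), program("C", 1)])
print(trace)
# ['A step 0', 'B step 0', 'C step 0', 'A step 1', 'B step 1', 'B step 2']
```

Only one step ever runs at a time, yet all three programs make progress, interleaved.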

If you ask for a thousand threads in your program, the OS will give them to you. Ostensibly, threads are a way to get parallelism, but really they're just another concurrency primitive. Each one defines work (in terms of code) that is queued up for the CPUs to work on.
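A quick Python experiment along those lines, using only the standard library: start 1,000 threads, each doing a trivial, made-up piece of work, on a machine with only a handful of cores. In CPython each `threading.Thread` is backed by a real OS thread, so it is the OS scheduler that interleaves them.

```python
import os
import threading

results = [None] * 1000


def work(i):
    results[i] = i * 2  # a tiny, made-up unit of work


threads = [threading.Thread(target=work, args=(i,)) for i in range(1000)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(f"{len(threads)} threads on {os.cpu_count()} CPUs; all done:",
      results == [i * 2 for i in range(1000)])
```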

The next time you see people working together, ask yourself where the parallelism is and where the concurrency is. Parallelism is easy to spot: it's the number of workers who can work at the same time. Concurrency is harder to spot, but often you'll see papers or charts, work lined up, buffers, and other ways to keep track of the work and push it through the process.